Top 7 Tips for Ensuring Data Quality in Projects

Ensure data quality in projects with advanced strategies, from governance to culture, for accurate, complete, and reliable data-driven decisions.

What if a single error in your data could derail an entire project? If you’re not treating data quality as a top priority, you might be closer to that scenario than you think. We’ve all seen the damage poor data can cause - misguided decisions, wasted resources, and missed opportunities. When every decision relies on your data, clean isn’t enough - the data also needs to be precise and complete. But how do you ensure your data is truly reliable, especially when the basics just don’t cut it anymore?

In this guide, I’ll walk you through seven advanced strategies to improve your data quality practices, ensuring your projects are built on a foundation of truly dependable data.

7 Key Tips for Ensuring Data Quality in Projects

1. Implement a Strong Data Governance Framework

To ensure data quality, you must start with a strong data governance framework. Without governance, your data management efforts will lack direction, consistency, and accountability.

How to Develop a Strong Data Governance Framework

1. Start by clearly defining who owns and who is responsible for each piece of data. This isn’t just about assigning titles; it’s about accountability. The data owner, typically a senior-level executive, sets the policies and objectives for the data. Meanwhile, the data steward ensures these policies are followed, managing data quality daily. Establish a stewardship council that meets regularly to address any issues that arise and to refine the governance strategy as your data needs evolve.

2. Create detailed data policies that outline the rules for data management. These should include guidelines on data entry, validation, storage, and access. Your policies must be aligned with business objectives, ensuring that data supports your strategic goals. Implement standards across all departments to maintain consistency. This includes naming conventions, data formats, and quality metrics. Regular audits should be conducted to ensure compliance and to identify areas for improvement.

3. Use technology to implement your governance framework. Deploy data management platforms that automate policy enforcement and provide real-time insights into data quality. Tools like data catalogs and metadata management systems can help maintain order and transparency. By integrating these tools, you not only reduce human error but also create an environment where data governance is embedded into daily operations.
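
To make the idea of automated policy enforcement concrete, here’s a minimal sketch in Python. It assumes two hypothetical standards that are not from any specific platform: column names must be snake_case, and dates must be written as YYYY-MM-DD. The function names and sample data are illustrative only.

```python
import re

# Hypothetical standards: snake_case column names and YYYY-MM-DD dates.
SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")
ISO_DATE = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def check_naming(columns):
    """Return the column names that break the snake_case convention."""
    return [name for name in columns if not SNAKE_CASE.match(name)]


def check_date_format(rows, field):
    """Return the indexes of rows whose date field is not YYYY-MM-DD."""
    return [
        i for i, row in enumerate(rows)
        if not ISO_DATE.match(str(row.get(field, "")))
    ]


# Illustrative sample data.
columns = ["customer_id", "SignupDate", "total_spend"]
rows = [
    {"signup_date": "2024-03-01"},
    {"signup_date": "03/01/2024"},
]

naming_violations = check_naming(columns)
format_violations = check_date_format(rows, "signup_date")
print(naming_violations)  # ['SignupDate']
print(format_violations)  # [1]
```

A check like this could run as part of a regular audit, so violations show up as a list to fix rather than as surprises later on.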

2. Advanced Data Cleansing Techniques

Basic data cleansing might suffice in simple projects, but when dealing with complex datasets, you need more sophisticated techniques to ensure data quality.

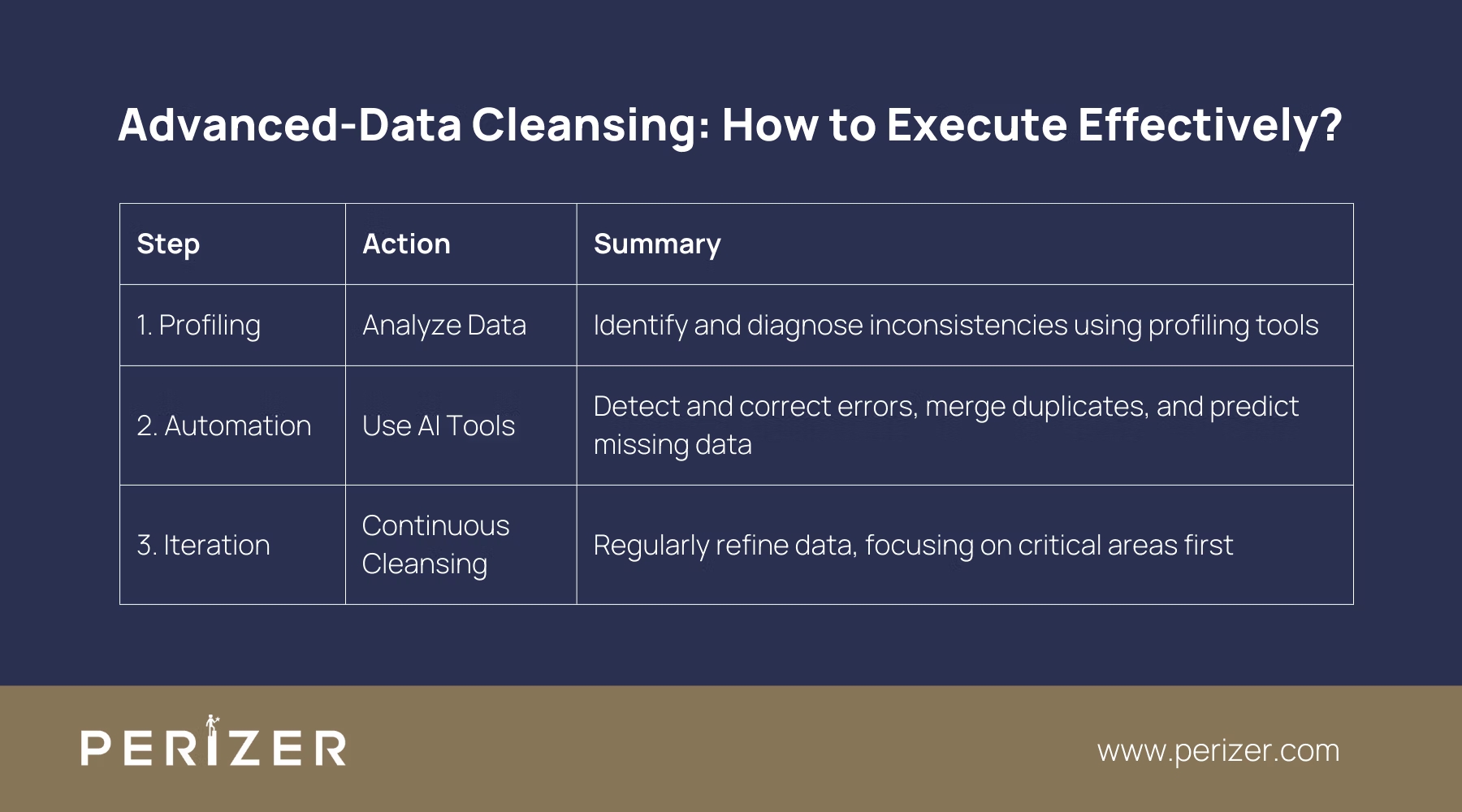

How to Execute Effective Data Cleansing

1. Start by conducting a thorough data profiling exercise. This involves analyzing the data to uncover inconsistencies, redundancies, and anomalies. Use advanced profiling tools that allow you to inspect the data at a granular level, enabling you to identify patterns and outliers that would otherwise go unnoticed. Diagnose the root cause of these issues - whether they stem from data entry errors, system migrations, or integration problems.

2. Implement automated tools that use machine learning algorithms to detect and correct data errors. These tools go beyond simple rule-based systems by learning from the data and improving over time. For instance, they can identify and merge duplicate records, standardize data formats, and estimate missing values from patterns in the rest of the data. Automation not only speeds up the cleansing process but also ensures consistency across large datasets.

3. Treat data cleansing as an ongoing process rather than a one-time activity. Set up an iterative cleansing cycle where data is continuously reviewed and refined. Start with the most critical data - those elements that directly impact your business outcomes - and expand from there. Use feedback loops to learn from each cleansing cycle, adjusting your strategies to address emerging data quality issues as they arise.
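
The profiling and deduplication steps above can be sketched in a few lines of Python. Note that this is a simple rule-based version, not the machine learning approach described in step 2, and the record layout and the `email` key are assumptions made for the example.

```python
from collections import Counter


def normalize_email(value):
    """Standardize an email so trivially different spellings compare equal."""
    cleaned = (value or "").strip().lower()
    return cleaned or None


def profile(records, field):
    """Summarize one field: row count, missing values, duplicated values."""
    values = [normalize_email(r.get(field)) for r in records]
    present = [v for v in values if v is not None]
    counts = Counter(present)
    return {
        "rows": len(values),
        "missing": len(values) - len(present),
        "duplicated_values": sorted(v for v, n in counts.items() if n > 1),
    }


def deduplicate(records, field):
    """Keep the first record per normalized key; keep rows that have no key."""
    seen, kept = set(), []
    for record in records:
        key = normalize_email(record.get(field))
        if key is not None and key in seen:
            continue  # a record with this key was already kept
        if key is not None:
            seen.add(key)
        kept.append(record)
    return kept


# Illustrative sample data.
records = [
    {"id": 1, "email": "Ana@Example.com"},
    {"id": 2, "email": " ana@example.com "},
    {"id": 3, "email": None},
    {"id": 4, "email": "bo@example.com"},
]

summary = profile(records, "email")
cleaned = deduplicate(records, "email")
print(summary)
print([r["id"] for r in cleaned])  # [1, 3, 4]
```

Running the profile before and after each cleansing cycle gives you a simple number to track, which fits the iterative approach in step 3.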

3. Ensuring Data Accuracy Through Validation

Validation is your first line of defense against inaccurate data. By implementing strong validation checks at every stage of the data lifecycle, you can prevent errors from creeping into your datasets.

How to Implement Advanced Data Validation

1. Set up real-time checks at data entry to catch errors instantly, before they spread. Use smart validation rules that adapt to the context - like automatically verifying date formats and ranges. These checks should not only flag issues but also suggest fixes on the spot.

2. Validate your data by cross-referencing it with multiple sources. This is especially useful for external data, like customer or supplier information. Automate this process with data integration tools that compare and highlight discrepancies, allowing you to resolve them before they become bigger problems.

3. Create complex validation rules specific to your data environment, combining business logic with statistical analysis. For example, flag sales data that looks off compared to historical trends. Use machine learning to continuously refine these rules, making them smarter and more accurate over time.
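
Here’s a minimal sketch of two of the checks described above: an entry-time date check that suggests a fix, and a statistical check that flags a sales figure far from its history. The date bounds, the three-standard-deviation threshold, and the function names are assumptions chosen for illustration; the statistical rule is a plain z-score, not a machine learning model.

```python
from datetime import date
from statistics import mean, stdev


def validate_order_date(text, earliest=date(2000, 1, 1), latest=None):
    """Return (ok, message) for a date string expected as YYYY-MM-DD."""
    latest = latest or date.today()
    try:
        parsed = date.fromisoformat(text)
    except (TypeError, ValueError):
        return False, "Use the format YYYY-MM-DD, for example 2024-03-01."
    if not earliest <= parsed <= latest:
        return False, f"Date must fall between {earliest} and {latest}."
    return True, ""


def is_unusual(value, history, threshold=3.0):
    """Flag a value more than `threshold` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    spread = stdev(history)
    if spread == 0:
        return value != history[0]
    return abs(value - mean(history)) / spread > threshold


# Illustrative sample data.
cutoff = date(2025, 1, 1)
daily_sales = [100, 102, 98, 101, 99]

print(validate_order_date("2024-03-01", latest=cutoff))  # (True, '')
print(validate_order_date("03/01/2024", latest=cutoff))
print(is_unusual(500, daily_sales))  # True
print(is_unusual(101, daily_sales))  # False
```

Returning a message alongside the pass/fail result is what lets the entry form suggest a fix on the spot instead of only rejecting the value.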

4. Improving Data Completeness

Incomplete data is a silent killer of data quality. It leads to flawed analyses and misguided decisions. Ensuring data completeness is crucial, and it requires a systematic approach.

How to Achieve and Maintain Data Completeness

1. Start with a gap analysis to pinpoint missing data. Map out where your data should be and compare it to what’s there. Data mapping tools can make it easier to spot these gaps. Once you find them, dig into the root cause - whether it's an entry error, a system issue, or something else.

2. To fill in gaps, move beyond basic fixes. Use machine learning to predict missing values based on existing data patterns, and consider data augmentation techniques to generate additional data that fits these patterns.

3. Set up continuous monitoring to keep your data complete. Automate regular checks to catch new gaps, and use alerts to quickly notify your team when something’s missing. This keeps your data reliable over time.
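
A gap analysis can start as something very small. The sketch below measures how often each required field is filled in and lists the fields that fall below a minimum. The field names and the 95% minimum are assumptions for the example, and it covers only the measuring step, not the prediction of missing values.

```python
def completeness(records, required_fields):
    """Return the share of records with a non-empty value per required field."""
    total = len(records)
    report = {}
    for field in required_fields:
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        report[field] = filled / total if total else 0.0
    return report


def find_gaps(report, minimum=0.95):
    """Return the fields whose completeness falls below the minimum."""
    return sorted(f for f, ratio in report.items() if ratio < minimum)


# Illustrative sample data.
customers = [
    {"name": "Ana", "email": "ana@example.com", "phone": "555-0100"},
    {"name": "Bo", "email": "", "phone": None},
    {"name": "Cy", "email": "cy@example.com", "phone": "555-0101"},
    {"name": "Di", "email": "di@example.com"},
]

report = completeness(customers, ["name", "email", "phone"])
gaps = find_gaps(report)
print(report)  # {'name': 1.0, 'email': 0.75, 'phone': 0.5}
print(gaps)    # ['email', 'phone']
```

Scheduling a check like this and sending the list of gaps to the responsible team is one simple way to get the continuous monitoring described in step 3.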

5. Using Metadata for Improved Data Quality

Metadata, often overlooked, plays an important role in ensuring data quality. It provides context, improves traceability, and enhances the overall understanding of your data assets.

Effective Metadata Management

1. Establish strong metadata management practices by ensuring all metadata is accurately and consistently captured across your organization. This includes both technical details like data types and structures, and business metadata such as definitions and data lineage. Use management tools to catalog and organize this information, making it easy for everyone to access and use.

2. Enrich your metadata with extra layers of information, such as quality metrics, usage statistics, and more detailed lineage. This enrichment deepens your understanding of data reliability and relevance, and it helps you troubleshoot issues more effectively by tracing errors back to their source.

3. Automate the capture of metadata to minimize human error and keep your metadata up to date. Automated tools can track data as it moves through your systems, recording changes in real time and maintaining a dynamic, accurate repository that evolves with your data.
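
To illustrate automated metadata capture, here’s a minimal sketch that records a lineage entry every time a transformation runs. The `tracked` decorator, the `LineageEntry` fields, and the in-memory `lineage_log` are all hypothetical; a real setup would more likely write to a data catalog or metadata store.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from functools import wraps


@dataclass
class LineageEntry:
    step: str
    source: str
    rows_in: int
    rows_out: int
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


lineage_log = []  # stands in for a metadata repository


def tracked(step, source):
    """Decorator that logs a lineage entry each time the function runs."""
    def wrap(func):
        @wraps(func)
        def inner(rows):
            result = func(rows)
            lineage_log.append(
                LineageEntry(step, source, len(rows), len(result))
            )
            return result
        return inner
    return wrap


@tracked(step="drop_inactive", source="crm_export")
def drop_inactive(rows):
    """Example transformation: keep only the active rows."""
    return [r for r in rows if r.get("active")]


# Illustrative sample data.
active_rows = drop_inactive(
    [{"id": 1, "active": True}, {"id": 2, "active": False}, {"id": 3, "active": True}]
)
print(lineage_log[0])
```

Because the entry is written by the decorator rather than by hand, nobody has to remember to document the step, and a sudden drop in row counts can be traced to the transformation that caused it.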

6. Continuous Data Quality Monitoring and Improvement

Data quality isn’t a one-time task; it requires ongoing vigilance. Continuous monitoring and improvement should be integral to your data management strategy.

Data Quality Monitoring

1. Create data quality dashboards that give real-time insights into key metrics. These dashboards should be customizable so each team can focus on the metrics that matter most to them - like accuracy and completeness for finance, or timeliness and relevance for marketing. Clear visualizations help everyone quickly grasp the status of data quality.

2. Implement automated alert systems that notify you immediately when data quality issues arise. Set thresholds for these alerts, like when accuracy drops below a certain level or when too many records are incomplete. Make sure the alerts are actionable, with clear steps on how to fix the problem.

3. Establish regular feedback loops to continuously review and improve data quality. Hold meetings with data stewards, owners, and other stakeholders to discuss trends, root causes, and potential improvements. Use these discussions to refine your processes, learn from past issues, and adapt to new challenges.
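
Threshold-based alerts like the ones in step 2 can be sketched as follows. The metric names, the minimum values, and the suggested next steps are assumptions for illustration; in practice each team would set its own.

```python
# Hypothetical minimum acceptable value for each metric.
THRESHOLDS = {"accuracy": 0.98, "completeness": 0.95}


def evaluate(metrics, thresholds=THRESHOLDS):
    """Return one actionable alert message per metric that needs attention."""
    alerts = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(
                f"{name}: no measurement received; "
                "check the job that reports it"
            )
        elif value < minimum:
            alerts.append(
                f"{name}: {value:.1%} is below the {minimum:.1%} minimum; "
                "review the most recent data load"
            )
    return alerts


# Illustrative sample data.
alerts = evaluate({"accuracy": 0.991, "completeness": 0.91})
for message in alerts:
    print(message)
```

Each message names the metric, the measured value, the threshold, and a next step, which is what makes the alert actionable rather than just noisy.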

7. Training and Culture: Enabling a Data Quality Mindset

Even with the best tools and processes, data quality ultimately depends on the people handling the data. Building a culture that prioritizes data quality is essential.

Building a Data Quality-Focused Culture

To create a culture that truly values data quality, start by offering advanced training for each team, focusing on what matters most to them - whether it’s ensuring data integrity in finance or accuracy in customer data for marketing. Make quality checks a seamless part of each team’s daily routine, with easy steps or automated checks that don’t add extra work.

Leadership should actively support these initiatives by pushing for the necessary resources and making it clear across the organization that data quality is a top priority. That visible backing is what sustains a culture of continuous improvement.

Conclusion

Data quality isn’t something you achieve once and forget about; it’s an ongoing challenge that demands a comprehensive approach and sustained attention. By putting these seven advanced strategies into practice - from establishing strong data governance to creating a culture that values quality - you can make sure your projects are built on a foundation of accurate, complete, and trustworthy data.

Your data is only as reliable as the quality controls you maintain, so keep refining your processes. With consistent effort, you'll make smarter decisions, develop more effective strategies, and ultimately see better results in your projects.
