7 Key Principles of Building a Scalable Software Architecture

Master the art of scalable software architecture with proven strategies in modular design, automation, and continuous improvement to future-proof your systems.

Every line of code you write today could be the difference between a system that scales smoothly and one that breaks under pressure. In a world where some systems must handle millions of transactions per second, a scalable architecture isn’t just a goal; it’s essential. If you’re deeply involved in managing complex systems, you know that scalability is about much more than simply adding resources.

Scaling doesn’t have to be a constant struggle, though. There’s a path forward that ensures your software doesn’t just keep pace but remains strong as demands grow. By designing for scalability from the start, you can create systems that meet today’s needs and are prepared to tackle tomorrow’s challenges with confidence.

In this guide, we’ll explore advanced strategies for building a scalable software architecture, with practical insights and tools to keep your systems robust and your business competitive.

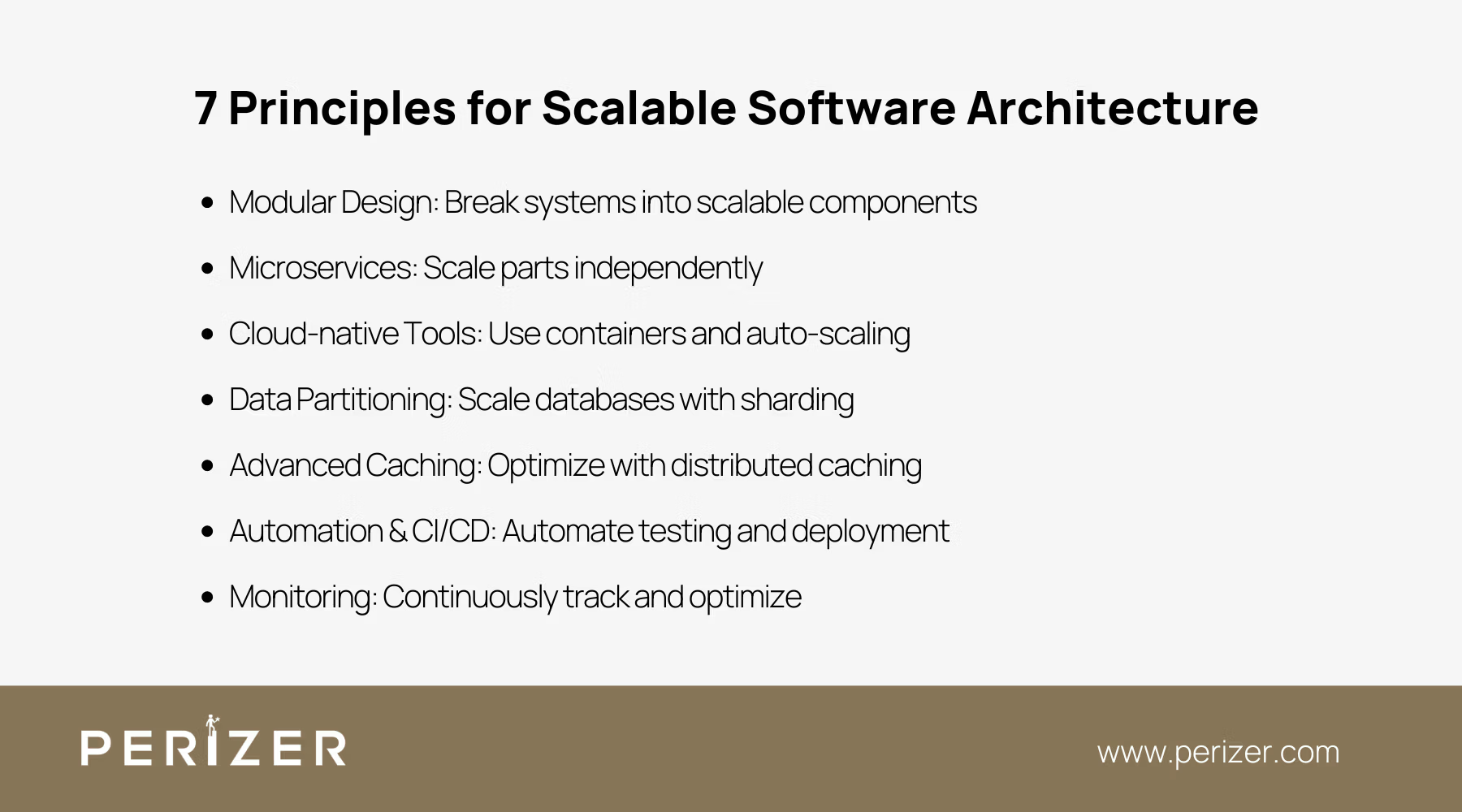

7 Principles for Scalable Software Architecture

1. Modular Design for Flexibility and Scalability

Modular design breaks a system down into separate, interchangeable components, which makes it easier to manage, scale, and change. It’s often the foundation of a scalable software architecture. Applying it effectively in large-scale systems requires careful planning so that your software stays flexible as it expands.

1. Component Decoupling: The first step is to break your system into distinct, loosely coupled modules. Decoupling doesn’t just prevent your application from becoming a tangled mess; it also allows you to scale each module independently. When one part of the system experiences a spike in demand, you can allocate more resources to it without affecting the rest of the system.

2. Inter-module Communication: Next, focus on efficient communication between these modules. Use asynchronous communication methods, such as message queues, to ensure that data flows smoothly without creating bottlenecks. By designing your modules to interact through well-defined interfaces, you maintain a level of abstraction that makes future scaling efforts more manageable.

3. Scalability in Action: By decoupling important components, such as payment processing from inventory management, you enable each part of your system to scale independently. This approach ensures that as demand increases, your system can handle the load efficiently without compromising performance or user experience.
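The three steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production design. An in-process `asyncio.Queue` stands in for a real message broker. `OrderPlaced`, `PaymentModule` and `InventoryModule` are hypothetical names. The payment module never calls the inventory module directly. The two modules share only the event type, so either side could be moved behind a real queue and scaled on its own.

```python
import asyncio
import contextlib
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderPlaced:
    """Hypothetical event type: the only contract the two modules share."""
    order_id: str
    sku: str
    quantity: int


class PaymentModule:
    """Publishes an event instead of calling the inventory module directly."""

    def __init__(self, bus: asyncio.Queue) -> None:
        self.bus = bus

    async def charge(self, order_id: str, sku: str, quantity: int) -> None:
        # Payment processing would happen here; then announce the result.
        await self.bus.put(OrderPlaced(order_id, sku, quantity))


class InventoryModule:
    """Consumes events at its own pace, so it can be scaled or replaced on its own."""

    def __init__(self, bus: asyncio.Queue, stock: dict[str, int]) -> None:
        self.bus = bus
        self.stock = stock

    async def run(self) -> None:
        while True:
            event = await self.bus.get()
            self.stock[event.sku] -= event.quantity
            self.bus.task_done()


async def main() -> dict[str, int]:
    bus: asyncio.Queue = asyncio.Queue()  # in-process stand-in for a message broker
    inventory = InventoryModule(bus, {"widget": 10})
    worker = asyncio.create_task(inventory.run())
    payments = PaymentModule(bus)
    await payments.charge("o-1", "widget", 3)
    await payments.charge("o-2", "widget", 2)
    await bus.join()  # wait until every published event has been handled
    worker.cancel()
    with contextlib.suppress(asyncio.CancelledError):
        await worker
    return inventory.stock


if __name__ == "__main__":
    print(asyncio.run(main()))  # {'widget': 5}
```

In a distributed deployment, the queue would typically be a broker such as RabbitMQ or Kafka. Each module would then run as a separate process that can be replicated independently.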

2. Adopting Microservices Architecture

Transitioning to a microservices architecture is another powerful way to build a scalable software system. While the move from a monolithic structure to microservices can be complex, the scalability benefits can be substantial. But how do you implement this architecture without running into common pitfalls?

1. Start by identifying the core functionalities of your system and breaking them down into individual services. This decomposition allows each service to be developed, deployed, and scaled independently. The key is to avoid over-complicating things: each service should be small enough to be manageable but large enough to provide value on its own.

2. Handling data in a microservices environment can be tricky. You need to decide whether each service should manage its own database or share a common one. The widely recommended practice is to give each service its own data store, so that each service owns its data and keeps it consistent internally. However, you must also implement strategies for maintaining consistency across services. One example is the Saga pattern, which splits a transaction that spans multiple services into a sequence of local transactions. If a later step fails, compensating actions undo the steps that already completed.

3. Efficient communication between services is crucial. Opt for lightweight protocols like HTTP/REST or gRPC for synchronous communication and use event-driven architecture for asynchronous interactions. Implementing a service mesh, such as Istio, can further help manage the complexities of service-to-service communication, providing features like load balancing, service discovery, and security.
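To make the Saga pattern from item 2 concrete, here is a minimal orchestrated-saga sketch. The step names and stubbed service calls are hypothetical. A real saga would also persist its progress and need idempotent compensations, which this sketch omits. Each step pairs an action with a compensating action. On failure, the orchestrator undoes the completed steps in reverse order.

```python
from typing import Callable

# A saga step: (name, action, compensating action that undoes the action).
Step = tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: list[Step]) -> list[str]:
    """Orchestrated saga: run local steps in order; if one fails,
    run the compensations of the already-completed steps in reverse order."""
    log: list[str] = []
    completed: list[Step] = []
    for step in steps:
        name, action, _compensate = step
        try:
            action()
        except Exception:
            log.append(f"failed:{name}")
            for done_name, _action, compensate in reversed(completed):
                compensate()
                log.append(f"compensated:{done_name}")
            return log
        log.append(f"done:{name}")
        completed.append(step)
    return log


if __name__ == "__main__":
    def ok() -> None:
        """Stand-in for a successful call to a hypothetical service."""

    def book_shipping() -> None:
        raise RuntimeError("shipping service unavailable")

    print(run_saga([
        ("reserve_stock", ok, ok),
        ("charge_card", ok, ok),
        ("book_shipping", book_shipping, ok),
    ]))
    # ['done:reserve_stock', 'done:charge_card', 'failed:book_shipping',
    #  'compensated:charge_card', 'compensated:reserve_stock']
```

This is the orchestration variant, where one coordinator drives the steps. In the choreography variant, each service reacts to the others' events instead.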

Despite its advantages, microservices architecture comes with challenges, such as increased complexity and the potential for data inconsistency. However, by adopting powerful monitoring tools and following best practices in service design, you can mitigate these risks and build a highly scalable system.

3. Effective Use of Cloud-native Technologies

Cloud-native technologies provide a flexible and powerful environment that makes scaling much easier than with traditional infrastructures. The real challenge is knowing how to fully tap into these tools to get the most out of them.

1. Containerization

You can start by adopting containerization. When you package your applications into containers, you create a consistent environment for deployment, no matter what infrastructure you're working with. Tools like Docker simplify this process, allowing you to containerize your applications easily. Then, tools like Kubernetes can help you manage these containers at scale by orchestrating them across a cluster of machines.

2. Serverless Computing

Serverless computing takes scalability even further. With a serverless architecture, you don’t manage servers yourself. Instead, you write functions that the cloud provider scales automatically based on demand. For example, AWS Lambda runs your code in response to specific events. It can scale from zero to thousands of concurrent executions, subject to your account’s concurrency limits.
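For illustration, a minimal AWS Lambda function in Python might look like the sketch below. It assumes an API Gateway proxy-style event with an optional JSON body. The `name` field is a hypothetical input. The function name is whatever you configure as the Lambda handler. The handler keeps no state between calls, which is what lets the platform run many copies side by side.

```python
import json


def lambda_handler(event, context):
    """Minimal Lambda handler sketch (Python runtime).

    Assumes an API Gateway proxy-style event; "name" is a hypothetical field.
    """
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "world")
    # Stateless: any instance can serve any request, so instances can be added freely.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello, {name}"}),
    }
```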

3. Auto-scaling Mechanisms

Auto-scaling is important for maintaining performance under varying loads. Cloud platforms like AWS, Azure, and Google Cloud offer built-in auto-scaling features that automatically adjust resources based on real-time metrics. By setting up policies that define when and how to scale your services, you can ensure your application handles fluctuations in traffic without the need for constant manual adjustments.
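A scaling policy boils down to a rule that maps a live metric to a desired capacity. The sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, with a tolerance band and min/max bounds. The default bounds and the function name are illustrative assumptions, and each cloud provider's policy engine has its own details.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 20,
    tolerance: float = 0.1,
) -> int:
    """Proportional target-tracking rule: ceil(current * metric / target).

    Example: 4 replicas at 90% average CPU with a 60% target -> ceil(4 * 1.5) = 6.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Ignore small deviations so the service doesn't flap between sizes.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Always clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

The upper bound caps runaway costs during anomalous traffic. The lower bound keeps enough capacity warm for sudden spikes.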

4. Data Partitioning and Sharding Strategies

As your system grows, so does your data, and scaling your database becomes a critical challenge. This is where strategies like data partitioning and sharding come into play, making it much easier to manage the growing data load efficiently.

1. Horizontal vs. Vertical Partitioning: Horizontal partitioning splits the rows of a table across multiple tables or databases based on a specific criterion, such as user ID. When those partitions live on separate database servers, this is called sharding. Each shard handles a subset of the data, reducing the load on any single database. Vertical partitioning, on the other hand, divides data by columns, storing different types of information in separate tables or databases.

2. Sharding Techniques: When implementing sharding, decide on a strategy that minimizes the impact on your application’s performance. For example, range-based sharding divides data based on a key range, while hash-based sharding distributes data evenly by applying a hash function to the key. The key is to balance the load across shards while ensuring that queries can still be executed efficiently.

3. Consistency and Availability Trade-offs: Once data is spread across multiple nodes, you have to trade consistency against availability, especially when nodes cannot reach each other. Where strong consistency is required, consider distributed transactions. Where absolute consistency is not critical, an eventual consistency model can keep the system available and responsive. Either way, you must carefully design your system to handle scenarios where data might be temporarily inconsistent.
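The two sharding techniques in item 2 can be compared side by side in a minimal routing sketch. The function names and the shard boundaries in the usage are hypothetical. Real systems usually keep this mapping in a routing layer or shard directory.

```python
import bisect
import hashlib


def hash_shard(key: str, num_shards: int) -> int:
    """Hash-based sharding: spreads keys evenly, but range scans hit every shard.

    Uses a stable hash (SHA-256) because Python's built-in hash() of str is
    randomized per process and would route the same key differently after a restart.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def range_shard(key: int, upper_bounds: list[int]) -> int:
    """Range-based sharding: shard i holds keys in [upper_bounds[i-1], upper_bounds[i]).

    upper_bounds must be sorted ascending. Range queries touch few shards,
    but sequential keys (e.g. new IDs) can concentrate load on the last shard.
    """
    index = bisect.bisect_right(upper_bounds, key)
    if index == len(upper_bounds):
        raise ValueError(f"key {key} is beyond the last shard's range")
    return index
```

For example, with bounds `[1000, 2000, 3000]`, key 999 routes to shard 0 and key 1000 to shard 1. One design caveat applies to simple modulo hashing: changing `num_shards` remaps most keys. Consistent hashing is the usual remedy when shards are added often.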

5. Advanced Caching Mechanisms

Caching is one of the most effective tools for scaling software systems. By reducing the load on your databases and speeding up data retrieval, caching helps keep your system running smoothly as demands grow. But to get the most out of caching, you need to plan and execute your strategy carefully.

1. Distributed Caching Systems

Start with distributed caching systems like Redis or Memcached. These tools store frequently accessed data in memory, so your application can retrieve it quickly without constantly querying the database. In a distributed setup, make sure your cache is replicated across multiple nodes (Redis supports replication natively) so that a single node failure can’t bring down your system.
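The most common way to put such a cache in front of a database is the cache-aside (lazy-loading) pattern. The sketch below assumes only a client exposing `get(name)` and `setex(name, time, value)`. The redis-py `Redis` client provides both. `get_with_cache_aside` and the 300-second default TTL are illustrative choices.

```python
import json
from typing import Any, Callable, Optional, Protocol


class CacheClient(Protocol):
    """The two calls used below; redis-py's Redis client provides both."""

    def get(self, name: str) -> Optional[Any]: ...
    def setex(self, name: str, time: int, value: str) -> Any: ...


def get_with_cache_aside(
    cache: CacheClient,
    key: str,
    load_from_db: Callable[[], dict],
    ttl_seconds: int = 300,
) -> dict:
    """Cache-aside read: try the cache, fall back to the database, then populate."""
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached)  # hit: no database round trip
    value = load_from_db()  # miss: read from the source of truth
    cache.setex(key, ttl_seconds, json.dumps(value))  # store with an expiry
    return value
```

With redis-py, you would pass a `redis.Redis(host="localhost", port=6379)` instance as `cache`. `load_from_db` would be your own database query, which is hypothetical here.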

2. Cache Invalidation Strategies

Keeping your cache fresh is key to preventing outdated data from causing issues in your application. Implement cache invalidation strategies like time-to-live (TTL) settings, which automatically expire cache entries after a certain period. You can also use cache tags to group related entries, so you can invalidate them all at once when the underlying data changes.
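Both invalidation strategies can be shown in a small in-process sketch. `TaggedTTLCache` is a hypothetical class, not a library API. Entries carry an expiry time for TTL-based invalidation, and a tag index supports invalidating related entries together. The injectable clock exists only to make expiry testable.

```python
import time
from collections import defaultdict
from collections.abc import Callable, Hashable
from typing import Any, Optional


class TaggedTTLCache:
    """Hypothetical in-process cache with per-entry TTL and tag-based invalidation."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._entries: dict[Hashable, tuple[Any, float]] = {}  # key -> (value, expires_at)
        self._keys_by_tag: dict[str, set[Hashable]] = defaultdict(set)

    def set(self, key: Hashable, value: Any, ttl: float, tags: tuple[str, ...] = ()) -> None:
        self._entries[key] = (value, self._clock() + ttl)
        for tag in tags:
            self._keys_by_tag[tag].add(key)

    def get(self, key: Hashable) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:  # TTL elapsed: treat as a miss
            del self._entries[key]
            return None
        return value

    def invalidate_tag(self, tag: str) -> int:
        """Drop every entry labeled with `tag`; returns how many were removed."""
        removed = 0
        for key in self._keys_by_tag.pop(tag, set()):
            if self._entries.pop(key, None) is not None:
                removed += 1
        return removed
```

For example, every cached product page could be tagged `"catalog"`. A single `invalidate_tag("catalog")` would then clear them after a bulk price update.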

3. Tiered Caching Approaches

Think about using a tiered caching strategy, which involves multiple layers of caches. For example, you might have a local cache on the application server and a global cache shared across the cluster. This setup reduces latency by serving data from the nearest cache layer, only falling back to more distant layers when absolutely necessary.
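A tiered lookup can be sketched as a loop over tiers ordered nearest-first. The sketch makes two assumptions. `DictTier` is a hypothetical dict-backed stand-in for any tier. A value found in a farther tier is copied back into the nearer tiers that missed. TTLs are left out for brevity, though a real local tier needs a short TTL to limit staleness.

```python
from typing import Any, Callable, Optional, Protocol


class CacheTier(Protocol):
    def get(self, key: str) -> Optional[Any]: ...
    def set(self, key: str, value: Any) -> None: ...


class DictTier:
    """Stand-in tier backed by a dict (a real shared tier might be Redis)."""

    def __init__(self) -> None:
        self.data: dict[str, Any] = {}

    def get(self, key: str) -> Optional[Any]:
        return self.data.get(key)

    def set(self, key: str, value: Any) -> None:
        self.data[key] = value


def tiered_get(key: str, tiers: list[CacheTier], load: Callable[[str], Any]) -> Any:
    """Check tiers nearest-first; on a hit, backfill the nearer tiers that missed."""
    for depth, tier in enumerate(tiers):
        value = tier.get(key)
        if value is not None:
            for nearer in tiers[:depth]:
                nearer.set(key, value)
            return value
    value = load(key)  # every tier missed: go to the origin
    for tier in tiers:
        tier.set(key, value)
    return value
```

Called as `tiered_get(key, [local, shared], load_from_origin)`, a second app server with a cold local cache is served from the shared tier. The origin is not hit again.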

A content delivery network (CDN) is a great example of advanced caching in action. By caching content at various locations around the world, a CDN reduces the load on the origin server and ensures users get their content quickly, no matter where they are.

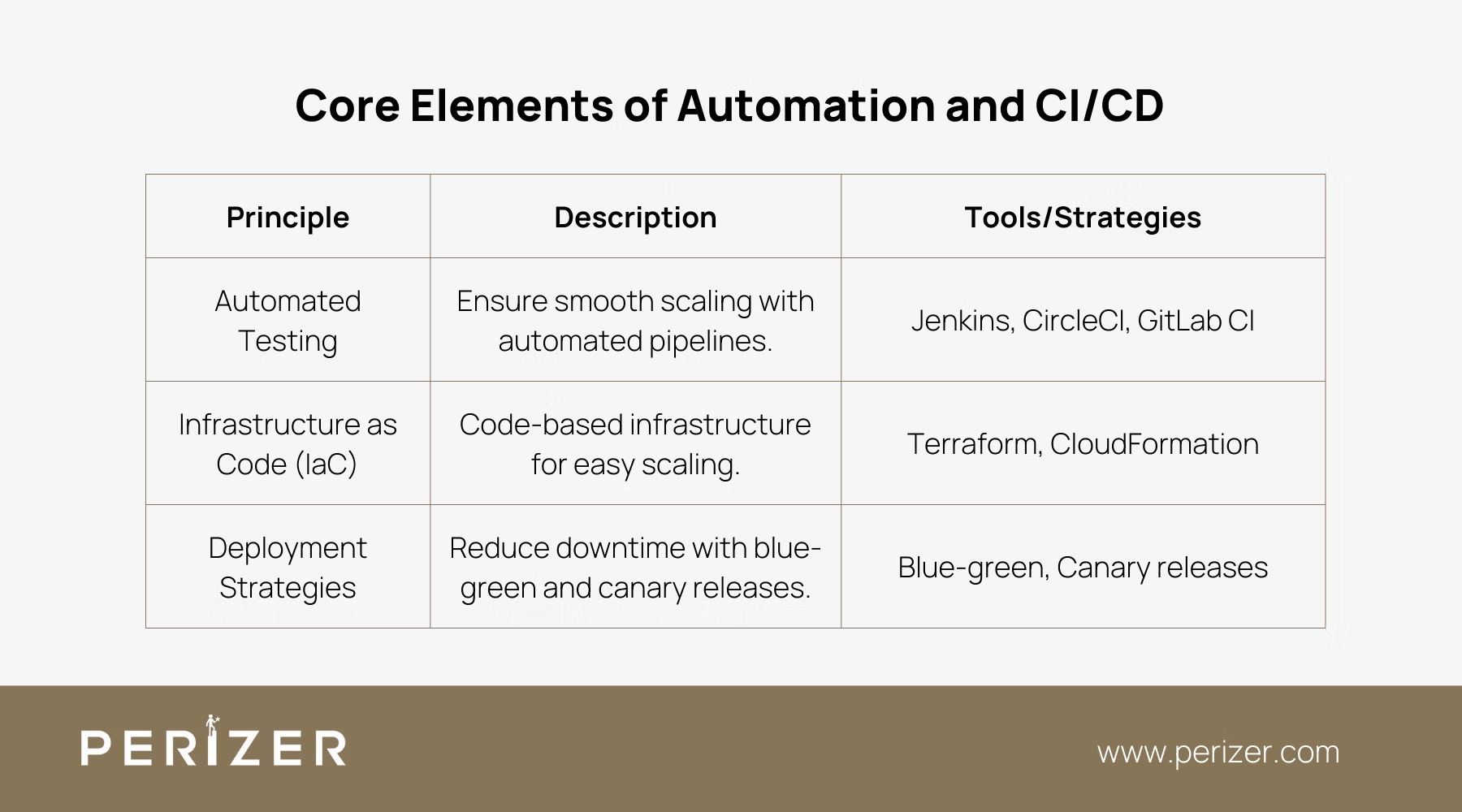

6. Automation and Continuous Integration/Continuous Deployment (CI/CD)

Automation isn’t just a nice-to-have; it’s essential for keeping your systems scalable. Continuous Integration and Continuous Deployment (CI/CD) pipelines play a crucial role in ensuring that your software can grow and adapt quickly without running into problems.

1. Automated Testing Pipelines

One of the first steps is setting up automated testing pipelines that check your code at every stage of development. This way, you can be confident that new features or bug fixes won’t cause unexpected issues, allowing your software to scale smoothly. Tools like Jenkins, CircleCI, or GitLab CI can help automate the entire process of building, testing, and deploying your software.

2. Infrastructure as Code (IaC)

Trying to manage scalable infrastructure manually can lead to all sorts of headaches. Instead, use Infrastructure as Code (IaC) tools like Terraform or AWS CloudFormation. These tools let you define your infrastructure with code, making it easy to replicate environments, apply changes consistently, and scale your infrastructure as your needs grow.

3. Deployment Strategies

The next important step is choosing the right deployment strategy to minimize downtime and ensure smooth updates. One effective approach is blue-green deployment, where you maintain two identical environments: one live and one idle. You roll out new changes to the idle environment first, and if everything looks good, you switch traffic over without causing disruptions.
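At its core, a blue-green switch is a single pointer flip guarded by a health check. In this sketch, `BlueGreenRouter` and the URLs in the usage are hypothetical. In practice the flip usually happens in a load balancer, DNS record, or service mesh route.

```python
from dataclasses import dataclass


@dataclass
class BlueGreenRouter:
    """Hypothetical router holding two environment URLs and which one is live."""

    blue_url: str
    green_url: str
    live: str = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def target(self) -> str:
        """URL that production traffic should be sent to right now."""
        return self.blue_url if self.live == "blue" else self.green_url

    def switch(self, idle_is_healthy: bool) -> str:
        """Flip traffic to the idle environment only if it passed its checks.

        The previous environment stays untouched, so rolling back is another switch.
        """
        if idle_is_healthy:
            self.live = self.idle
        return self.live
```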

Another approach is canary releases, where you gradually introduce changes to a small group of users before rolling them out to everyone. This way, you can catch and address any potential issues early on.
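Canary routing needs a way to pick "a small group of users" consistently. A common technique is to hash each user ID into a fixed bucket, as in this minimal sketch. `in_canary` and the salt value are hypothetical. Hashing keeps each user on the same version across requests. Raising `percent` keeps existing canary users in the cohort while adding new ones.

```python
import hashlib


def in_canary(user_id: str, percent: float, salt: str = "release-2") -> bool:
    """Deterministically place roughly `percent`% of users in the canary cohort.

    `salt` is a hypothetical per-release value; changing it reshuffles cohorts.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # bucket in 0..9999
    return bucket < percent * 100  # e.g. percent=5 -> buckets 0..499
```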

7. Monitoring and Observability for Proactive Scaling

Scaling your software architecture successfully isn’t just about building it right; it’s about keeping a vigilant eye on it as it grows. A proactive approach to monitoring and observability is key to catching potential issues before they affect your system’s scalability.

1. Distributed Tracing

Begin with distributed tracing to closely monitor how your microservices are performing. Tools like Jaeger or Zipkin can trace requests as they move through your system, helping you spot performance bottlenecks that might need attention. By understanding how each service interacts with the others, you can make the best decisions about where to focus your scaling efforts.
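Tracing works because every service forwards a trace context with each outgoing request. The sketch below shows that propagation step using the W3C Trace Context `traceparent` header (`version-trace_id-parent_id-flags`). It handles only version `00`, and `child_traceparent` is a hypothetical helper. In practice, an OpenTelemetry SDK handles this for you and can export the resulting spans to backends such as Jaeger or Zipkin.

```python
import re
import secrets
from typing import Optional

# W3C Trace Context "traceparent" header: version-trace_id-parent_id-flags
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def child_traceparent(incoming: Optional[str]) -> str:
    """Return the traceparent header this service should send downstream.

    Keeps the caller's trace ID (so every hop lands in the same trace) and
    mints a new span ID for this hop; starts a new trace if none is valid.
    """
    match = TRACEPARENT_RE.match(incoming or "")
    if match and match.group(1) != "0" * 32 and match.group(2) != "0" * 16:
        trace_id, flags = match.group(1), match.group(3)
    else:
        trace_id, flags = secrets.token_hex(16), "01"  # new trace, marked sampled
    span_id = secrets.token_hex(8)
    return f"00-{trace_id}-{span_id}-{flags}"
```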

2. Real-time Metrics Collection

Keeping an eye on real-time metrics is just as important for maintaining your application’s health. Prometheus can collect these metrics and raise alerts on anything unusual, while Grafana can visualize them in dashboards. Pay close attention to key performance indicators (KPIs) like response times, error rates, and resource utilization. These are the metrics that most directly reflect your system’s ability to scale.
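To see what two of those KPIs mean numerically, here is a sketch that summarizes one time window of requests. It computes a nearest-rank 95th-percentile latency and a server-error rate. `Request` is a hypothetical record shape. Prometheus itself typically estimates percentiles from histogram buckets with `histogram_quantile()` rather than from raw samples like this.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    """One completed request in the current window (hypothetical record shape)."""
    latency_ms: float
    status: int


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def window_kpis(requests: list[Request]) -> dict[str, float]:
    """Summarize one time window into latency and error-rate KPIs."""
    if not requests:
        raise ValueError("no requests in this window")
    errors = sum(1 for r in requests if r.status >= 500)  # server-side failures only
    return {
        "p95_latency_ms": percentile([r.latency_ms for r in requests], 95),
        "error_rate": errors / len(requests),
        "request_count": float(len(requests)),
    }
```

Tail percentiles such as p95 are usually more useful than averages here. A few very slow requests can signal saturation long before the mean moves.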

3. Alerting and Automated Responses

Don’t wait until issues escalate. Set up automated alerts that notify you as soon as a problem starts brewing. Combine these alerts with automated responses, like scaling resources or restarting services, so you can address issues immediately. Tools like PagerDuty can integrate seamlessly with your monitoring system to provide you with on-call alerts and help you manage incidents without delay.
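An alert that fires on a single bad sample tends to page people for noise. A common refinement is to fire only after a condition has held for several consecutive evaluations, similar in spirit to the `for` clause in Prometheus alerting rules. In this minimal sketch, `AlertRule` and its fields are hypothetical. The `on_fire` callback is where an automated response, such as a scale-out call or a PagerDuty notification, would plug in.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AlertRule:
    """Hypothetical rule: fire after `for_evaluations` consecutive threshold breaches."""

    name: str
    threshold: float
    for_evaluations: int
    on_fire: Callable[[str, float], None]  # e.g. notify on-call and/or scale out
    _breaches: int = field(default=0, init=False)
    firing: bool = field(default=False, init=False)

    def evaluate(self, value: float) -> bool:
        if value > self.threshold:
            self._breaches += 1
        else:
            self._breaches = 0
            self.firing = False  # condition cleared: alert resolves
        if self._breaches >= self.for_evaluations and not self.firing:
            self.firing = True
            self.on_fire(self.name, value)  # fire once per incident, not every tick
        return self.firing
```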

4. Tools and Techniques

Picking the right tools for monitoring and observability is essential to keeping your architecture in check. Depending on what your system needs, you might find that a combination of open-source tools and cloud-specific solutions like AWS CloudWatch or Azure Monitor works best. This combination helps ensure that every part of your stack is covered, so your system keeps running smoothly.

Conclusion

Developing a scalable software architecture is a continuous process that requires strategic thinking, persistent effort, and adaptability. By focusing on modular design, efficient data management, automation, and vigilant monitoring, you can build a system that stands up to today’s demands and is ready to grow with your needs. Remember, scalability isn’t something you achieve once; it’s a commitment to regularly refining and improving your architecture to stay ahead.

Adopt modular design, use cloud-native solutions, and make continuous monitoring and optimization the foundation of your approach.
